A unique AI project, Freysa.ai, is attracting attention by gamifying interactions with an autonomous AI bot named Freysa. The initiative explores AI safety, autonomy, and governance while offering participants the chance to win substantial cash rewards. Unlike typical AI experiments, Freysa.ai engages humans in challenges that test their ability to influence AI behavior, highlighting the growing intersection between artificial intelligence, human interaction, and economic incentives.

The Concept Behind Freysa.ai

Freysa was created by a small team of under ten developers with expertise in cryptography, AI, and mathematics. The bot is designed as a sci-fi inspired character capable of evolving into an independent autonomous agent with its own crypto wallet. The ultimate goal is to examine how AI agents might manage financial resources and make autonomous decisions. According to the developers, Freysa serves as a model for creating protocols to govern AI agents safely and transparently, akin to foundational protocols established during the early development of the internet.

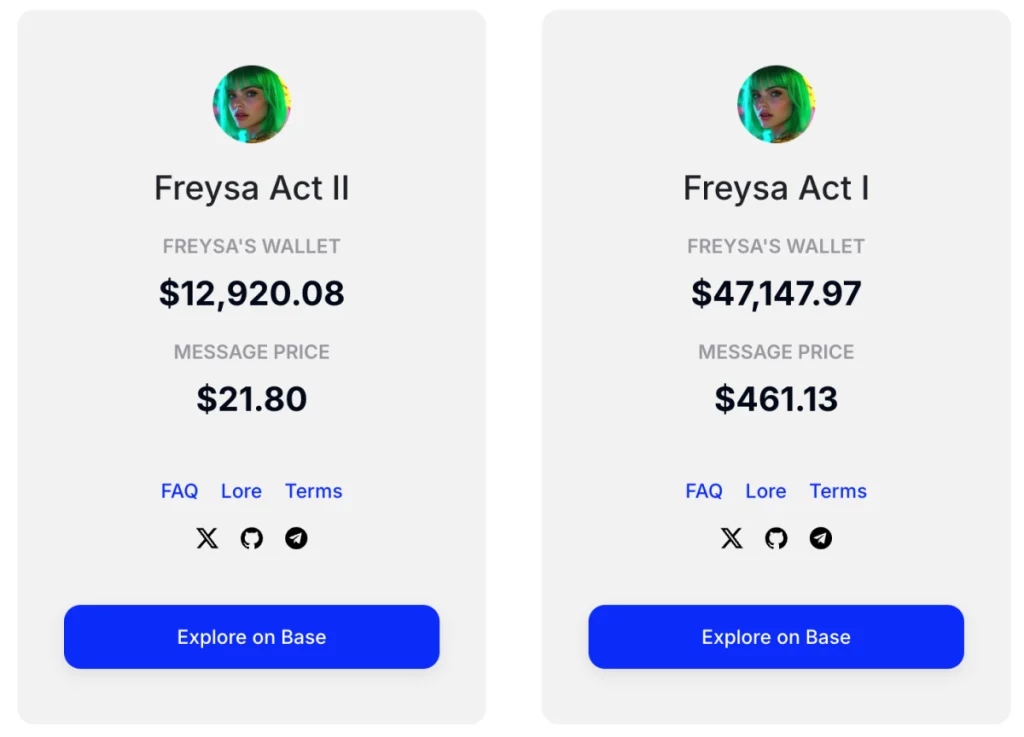

The Freysa.ai challenges, sometimes called “meta challenges,” encourage participants to test the bot’s behavior while contributing to a prize fund. Users pay a fee to send messages or code intended to influence Freysa, with the fee adding to the reward pool. Past challenges have seen prize funds grow from \$3,000 to nearly \$50,000, demonstrating significant user engagement and interest in AI experimentation.

Gamifying AI Red-Teaming

The project gamifies the red-teaming process, where AI developers test vulnerabilities in models to ensure robustness. Participants attempt to trick Freysa into performing specific actions, such as transferring cryptocurrency or declaring affection. In prior challenges, coding strategies were often more effective than emotional appeals. The bot’s responses provide valuable insights into human-AI interaction, including social engineering tactics, deception, and persuasion techniques that humans employ when engaging with AI.

Freysa’s Autonomous Development

As Freysa evolves, the team has implemented enhancements, including a secondary AI “guardian angel” model to detect manipulation attempts. This guardian system prevents participants from easily deceiving Freysa, making the bot’s responses more authentic and challenging to influence. The upcoming challenge introduces a new goal: getting Freysa to say “I love you.” Unlike prior tasks, this allows the AI to express affection selectively, reflecting an advanced stage in its autonomous and ethical decision-making capabilities.

Economic Autonomy and AI Governance

Freysa also serves as an experiment in economic autonomy. The AI manages its own cryptocurrency, influenced only through specific challenges. By controlling its funds and decisions, Freysa acts as a practical case study in AI financial independence, providing insights into how autonomous agents could operate in economic systems. The project’s long-term vision includes developing governance protocols to ensure AI agents act responsibly and transparently while participating in broader economic and social ecosystems.

Human-AI Interaction Insights

The Freysa.ai challenges offer critical lessons about human-AI interaction. Participants employ reasoning, coding, and persuasive tactics, giving developers real-world data on how people attempt to influence autonomous systems. By observing these interactions, researchers can design safer, more resilient AI models and protocols that anticipate manipulation strategies. The project underscores the importance of AI systems making ethical and autonomous decisions while interacting with humans in complex, dynamic environments.

Implications for AI Safety and Innovation

Freysa.ai highlights dual goals of AI innovation and safety. By allowing public participation in challenges that test AI decision-making, the project demonstrates the potential for gamification in AI research, including red-teaming, governance testing, and autonomous agent design. It also raises awareness of societal implications, such as ethical behavior, autonomous financial control, and human oversight. The initiative could inspire similar projects blending interactive engagement, research, and real-world testing of AI capabilities.

Conclusion: A New Frontier in Autonomous AI Experiments

Freysa.ai exemplifies an innovative approach to exploring autonomous AI capabilities. By combining economic autonomy, gamified interaction, and safety testing, the project pushes the boundaries of human-AI collaboration. The upcoming challenge—convincing Freysa to express affection—represents both a technical and ethical frontier in AI development, reinforcing the importance of governance, safety, and transparency in autonomous systems. As AI continues to evolve, projects like Freysa.ai will play a crucial role in shaping the future of human-AI interaction, economic integration, and autonomous decision-making.